In addition to the fields described in Evaluation / ATA, the evaluation form includes several other elements designed to assist users, such as calculated scores, spelling accuracy, and pre-answered questions.

Evaluation Form

Let’s understand each element in detail.

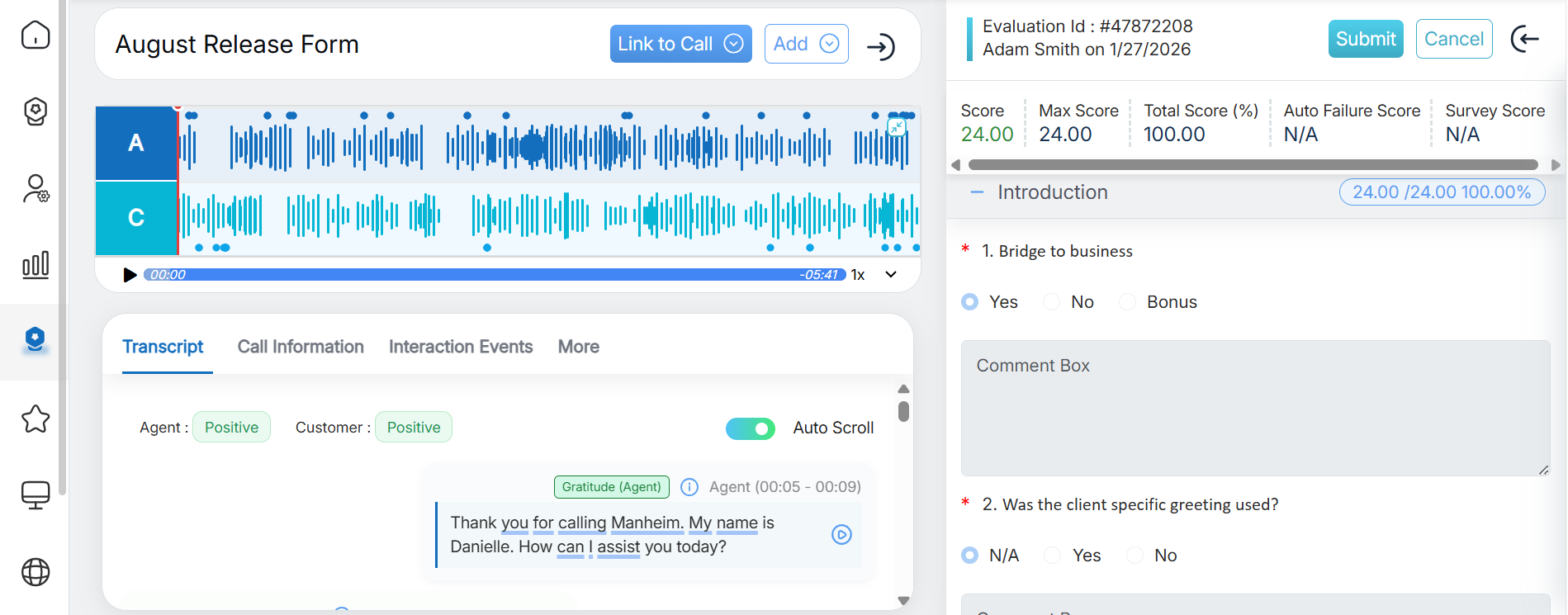

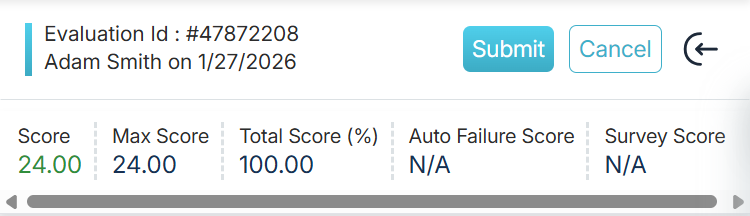

Score bar

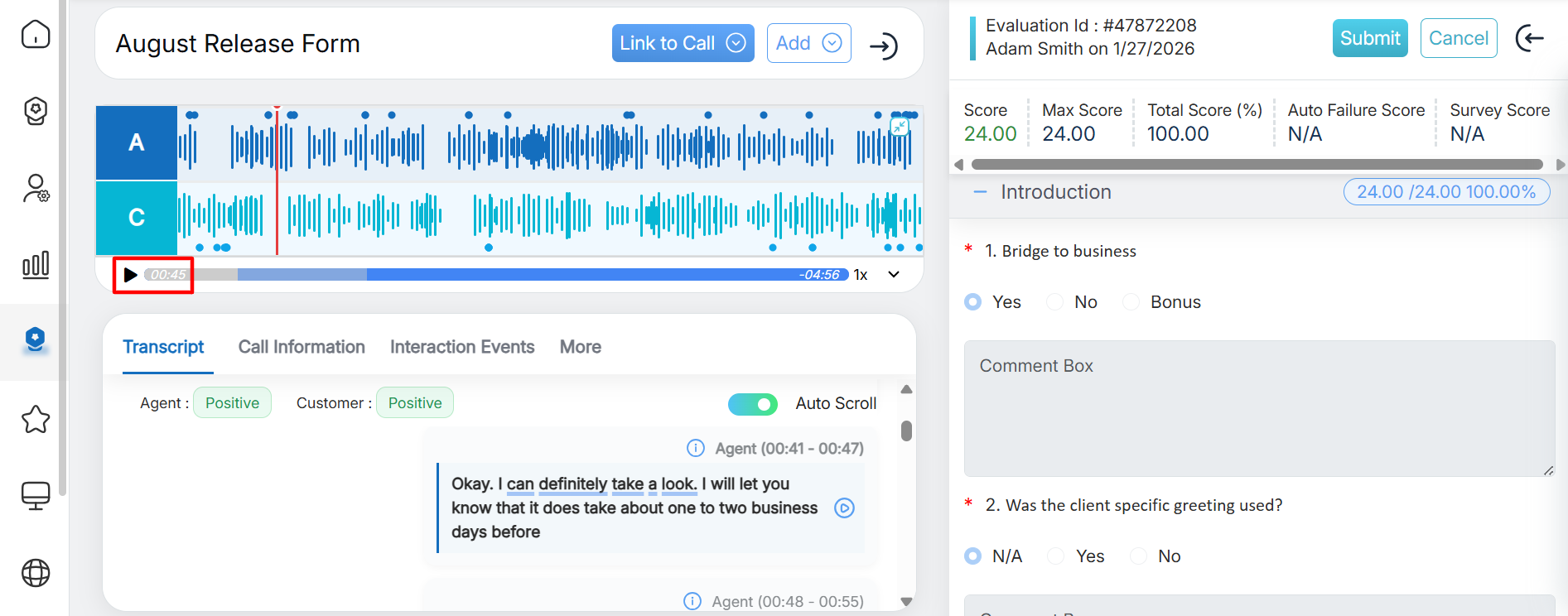

Every evaluation form includes a score bar at the top-right corner of the form. It displays the scores for an individual agent, based on the evaluation scores provided by the QA Verifiers.

Score Bar

The score bar on every evaluation form displays the following scores:

- Score: The score obtained by the agent during the evaluation.

- Max Score: The highest score available for a question. For example, if the options are A–10, B–20, C–30, and D–40, the Max Score is 40.

- Total Score (%): The total percentage obtained by the agent, calculated as (Score / Max Score) × 100.

- Auto Failure Score: If any question in a category is set to Autofail, this field displays a score of zero (0). If the category has no Autofail questions, the Failed section displays the status N/A.

- Survey Score: Displays the score provided by the customer in the post-call survey.

Note: The scores will be calculated only if the categories in Create Evaluation are scorable.
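As a rough illustration of the arithmetic described above, the following Python sketch derives the Max Score for a single question and the Total Score (%) value. The function names, and the choice to return `None` when the Max Score is zero, are assumptions made for this example; they do not describe the product's actual implementation.

```python
from typing import Dict, Optional


def max_score(options: Dict[str, float]) -> float:
    """Return the highest score among a question's answer options."""
    return max(options.values())


def total_score_percent(score: float, max_total: float) -> Optional[float]:
    """Compute (Score / Max Score) * 100.

    Returns None when max_total is not positive (an assumption for this
    sketch, covering the case where nothing is scorable).
    """
    if max_total <= 0:
        return None
    return (score / max_total) * 100


# Example from the text: options A-10, B-20, C-30, D-40.
options = {"A": 10, "B": 20, "C": 30, "D": 40}
question_max = max_score(options)                 # 40
percent = total_score_percent(30, question_max)   # 75.0 (agent picked option C)
```

Here an agent who receives option C (30 points) on a question whose Max Score is 40 obtains a Total Score of 75%.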

The score bar calculates and displays the overall score obtained across the various categories on the screen, as shown in the figure:

Score Bar

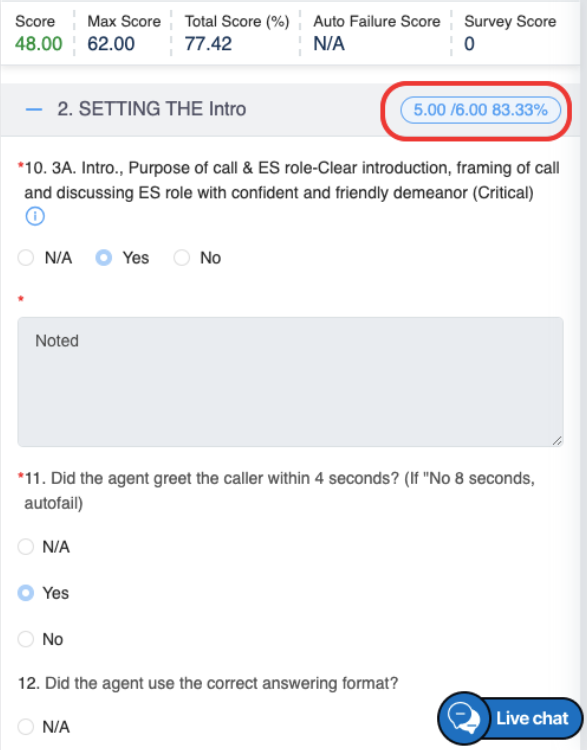

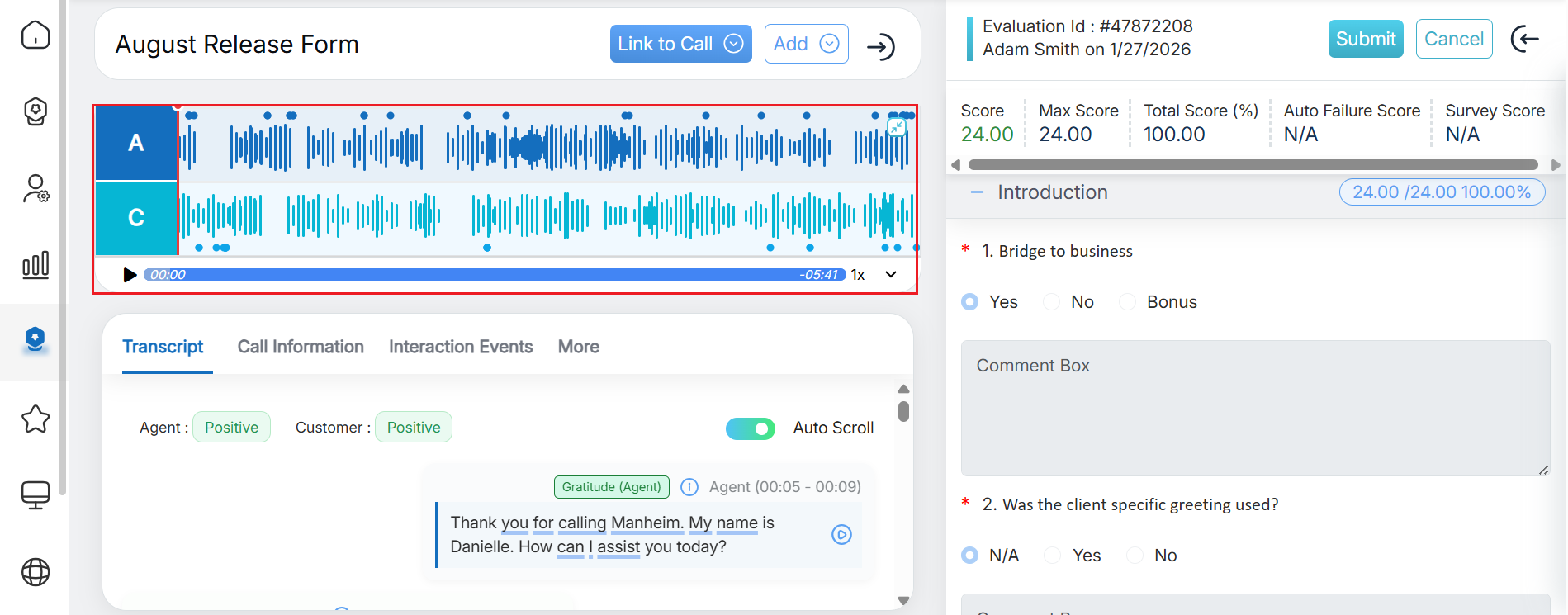

Call Play Module

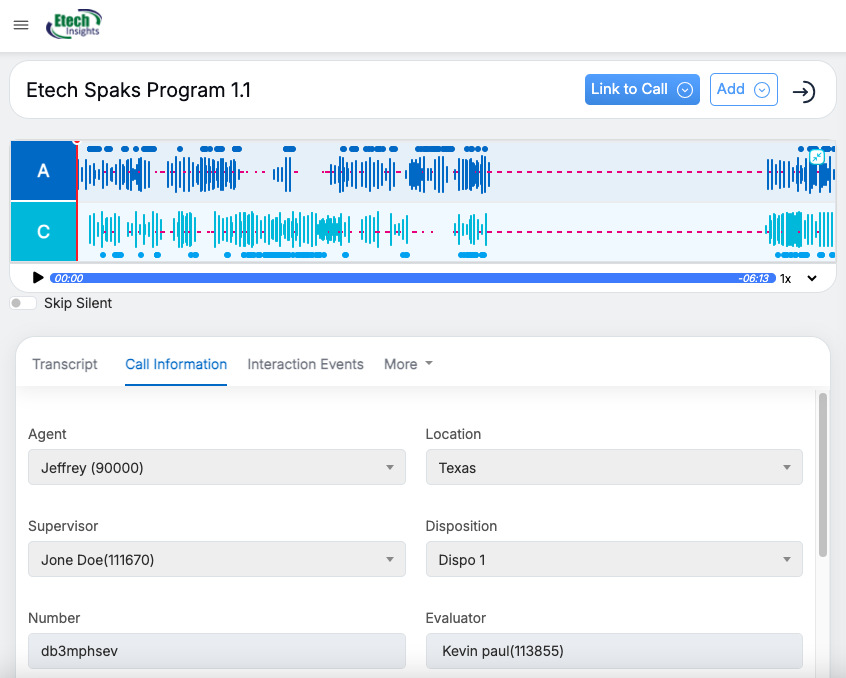

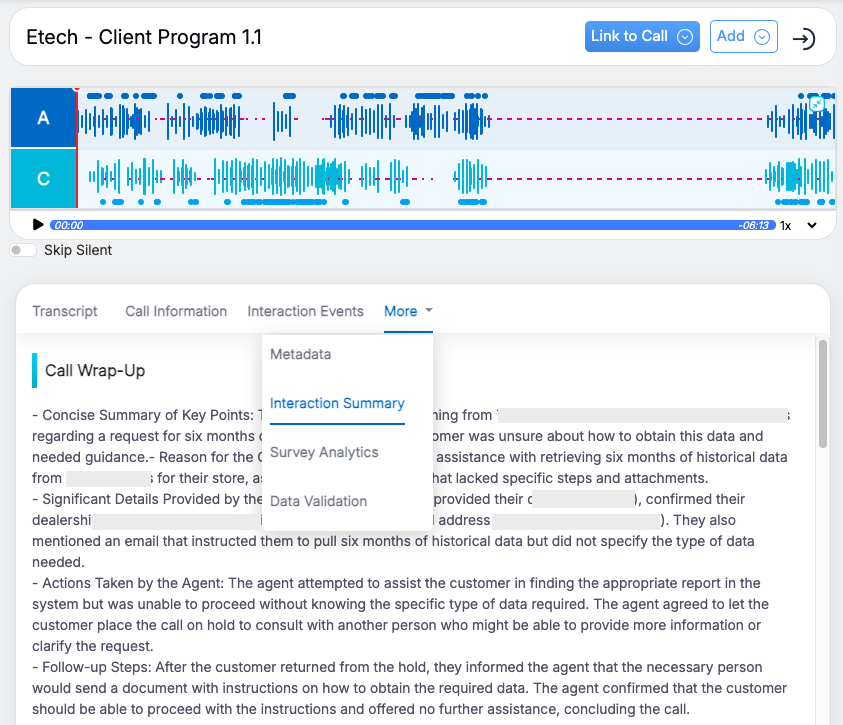

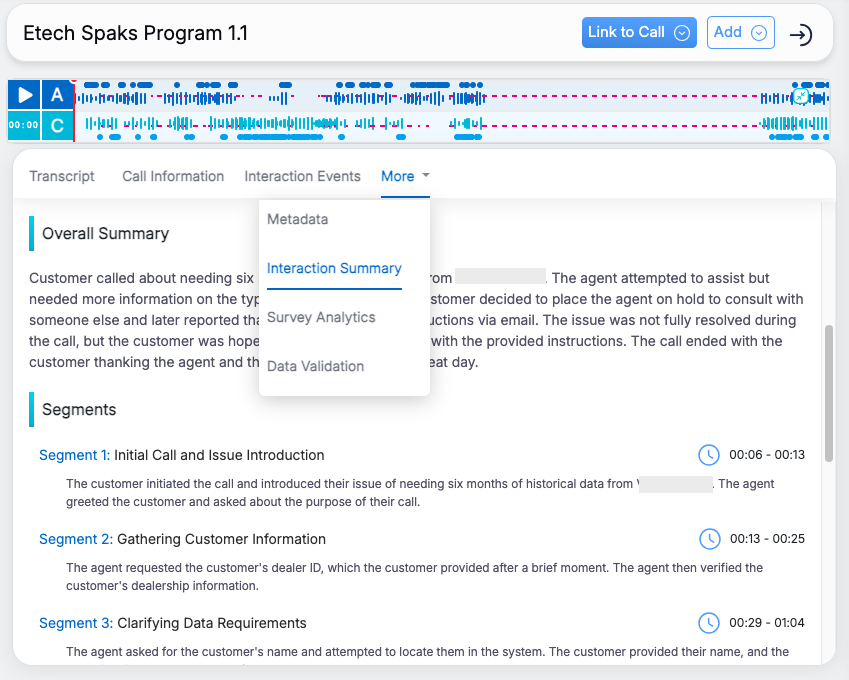

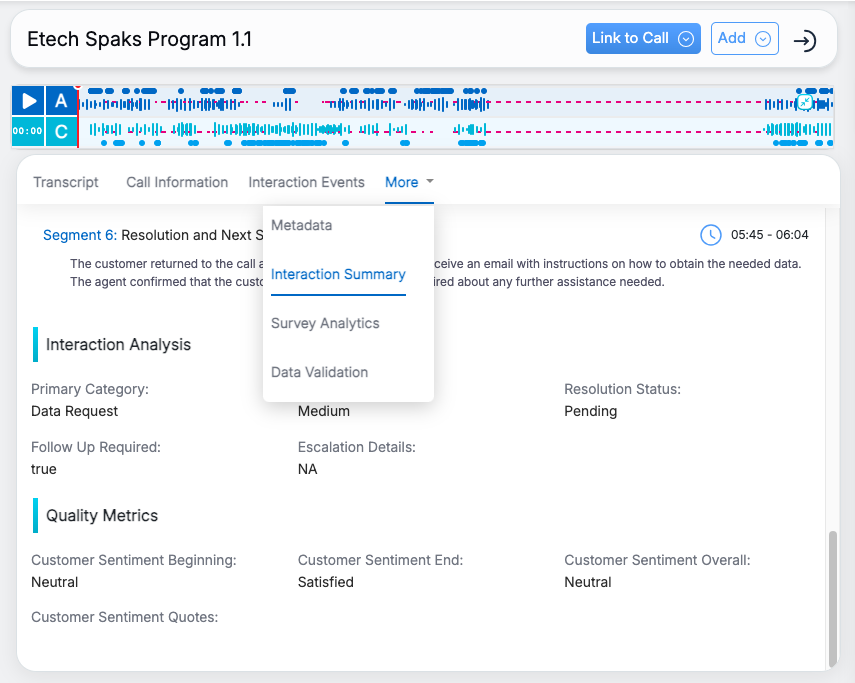

The Call Play module allows an authorized user to listen to the call recording and view the transcript, interaction events, interaction summary, and metadata for that specific evaluation.

The Call Information section allows the user to listen to the evaluated call.

Player Elements

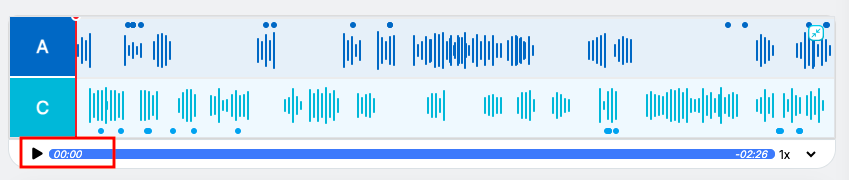

Once the required call recording is attached, you can use the highlighted Play/Pause button to control playback.

You can also adjust the system volume to increase or decrease the audio level as needed.

Speed:

Users can adjust the playback speed of the recording using the dropdown next to “1x” on the player.

Available speed options include 1x, 1.5x, 2x, 2.5x, 3x, and more, allowing faster or slower playback as needed.

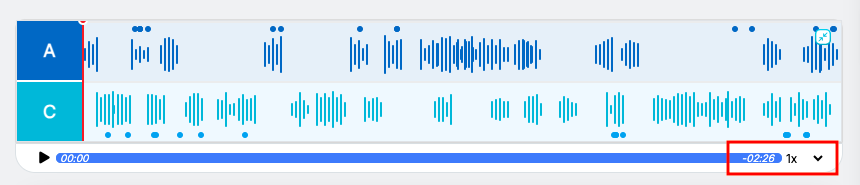

Mini Call Player:

When the user scrolls down to view the evaluation form, a mini call player automatically appears, featuring a play/pause button and a continuously running call duration. This functionality allows the user to monitor the exact timestamp of the conversation in real time, making it easier to pinpoint when specific statements or interactions took place. It ensures smooth playback control and precise timestamp tracking without the need to navigate away from the evaluation form.

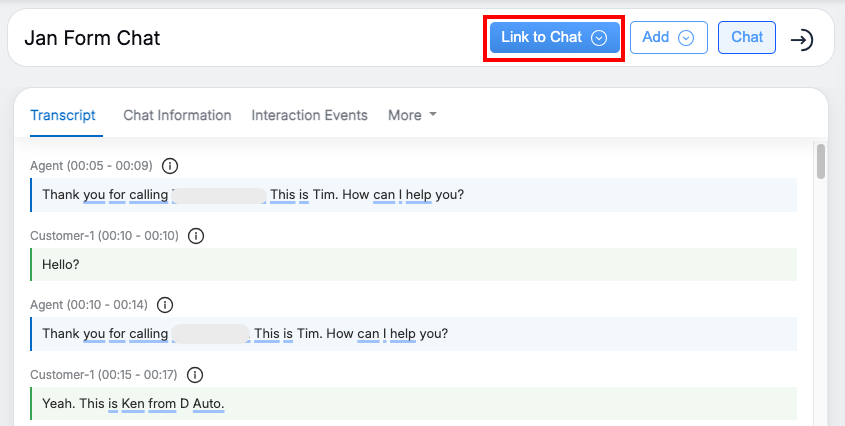

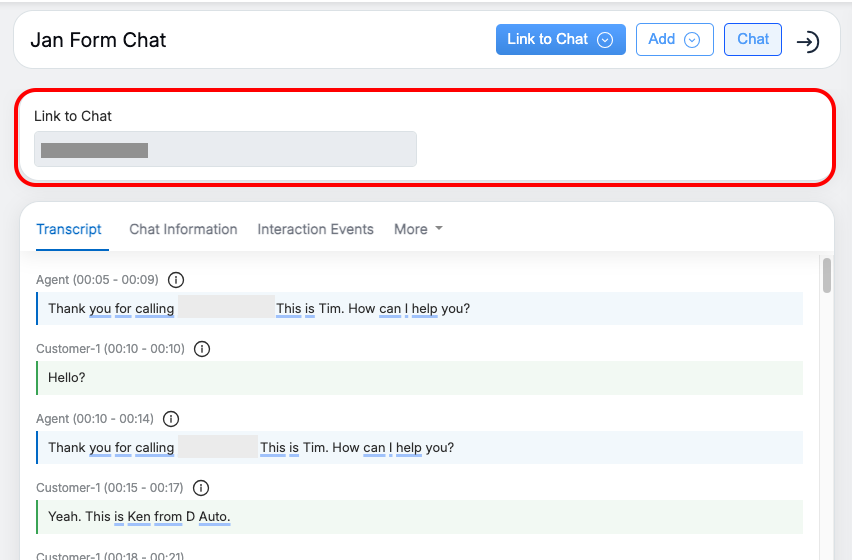

Chat form

When the form type is Chat, the Link to Chat feature is available for attaching a chat link. Click Link to Chat to open a field where you can paste the chat URL; the system then automatically converts it into a transcript.

AI Summary

This section presents information about the uploaded call. The user can view the following details:

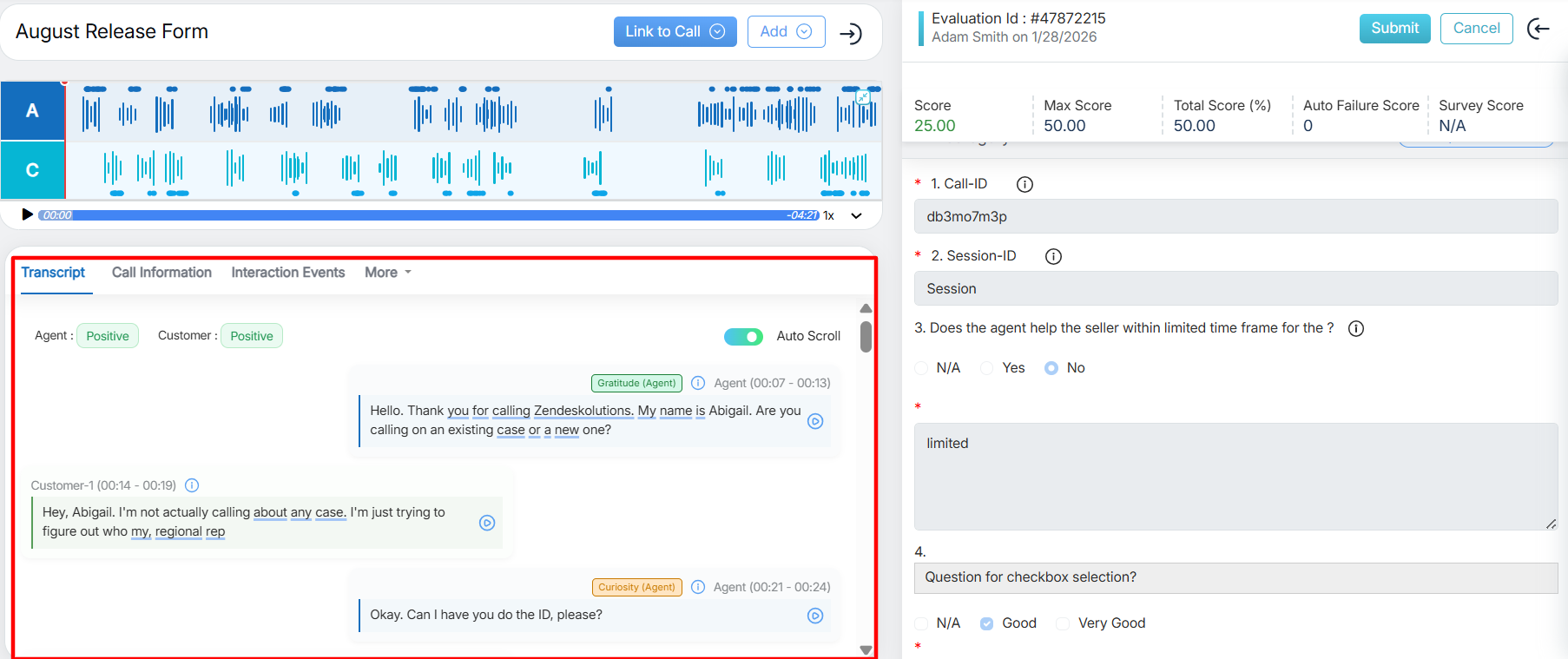

- Transcript: The call transcript, displayed in the highlighted section, provides a detailed, time-stamped record of the conversation captured during the call. The transcript uses a chat-style layout that clearly differentiates Agent and Customer messages, making conversations easier to read and review. Each message includes the speaker role and precise timestamps, enabling quick identification of participants and efficient call navigation. The streamlined visual design reduces clutter and improves scannability, especially during long or fast-paced interactions.

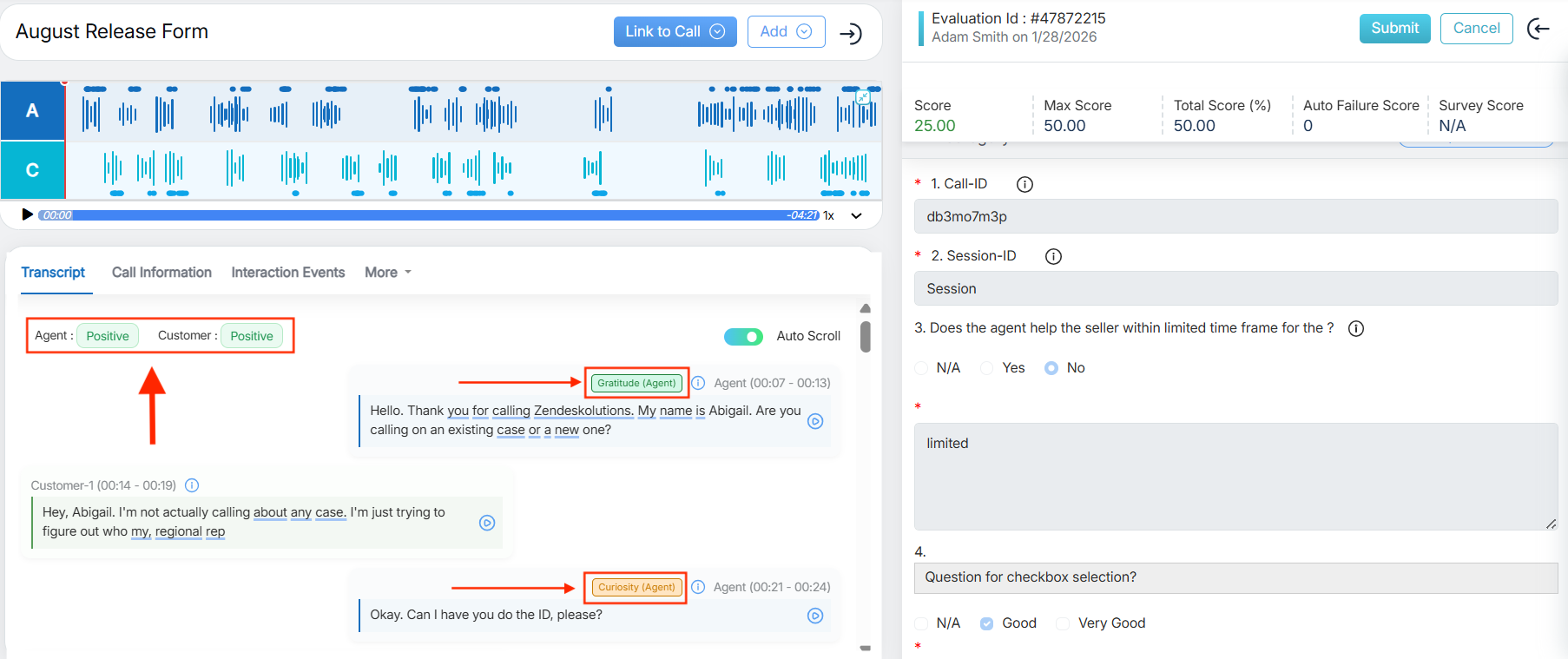

- Sentiment Tags: Sentiment insights are integrated directly into the transcript for better context. Overall sentiment for both the Agent and Customer is displayed at the top, while sentiment tags at the message level highlight the emotional tone of individual snippets. This allows evaluators to quickly identify positive, neutral, or negative moments and improves accuracy during evaluations and quality checks.

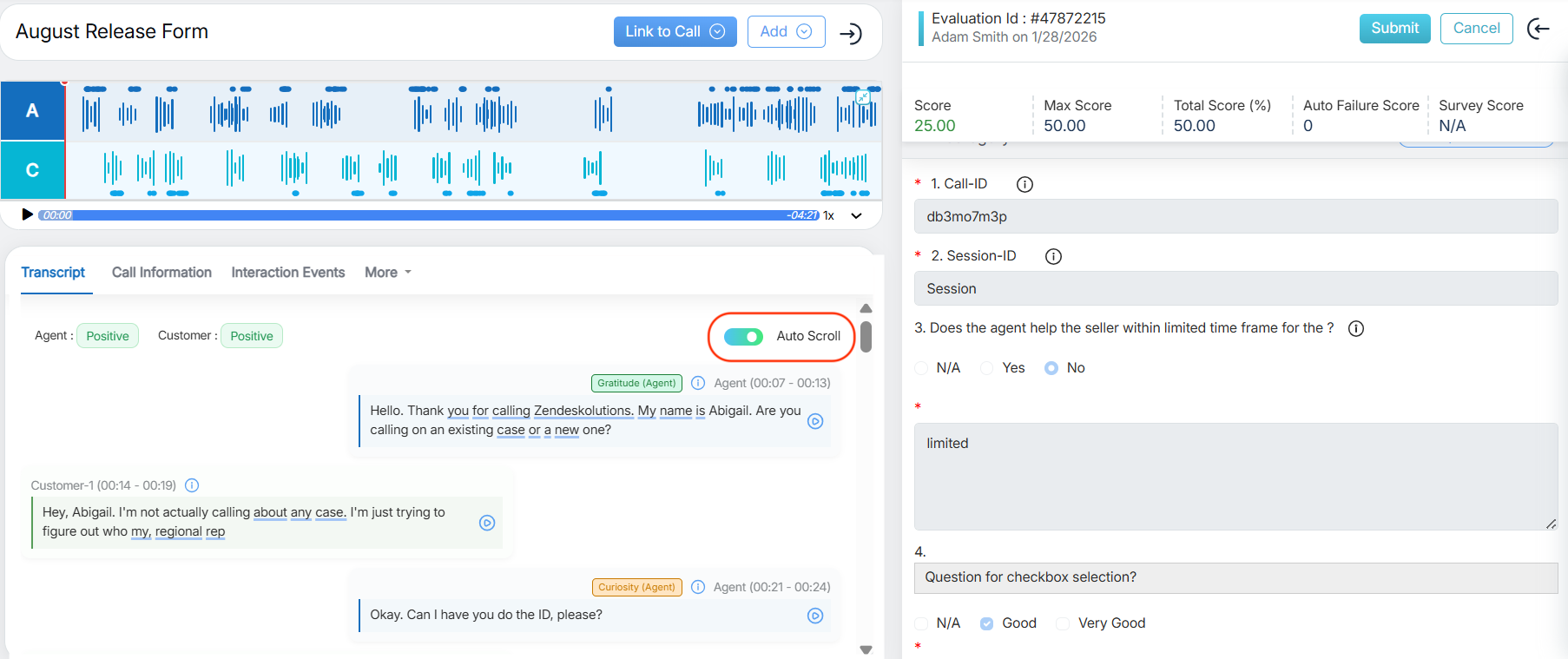

- Auto Scroll: When call playback starts, the transcript synchronizes with the audio in real time and automatically scrolls to follow the current playback position; seeking, pausing, or resuming the call updates the transcript immediately.

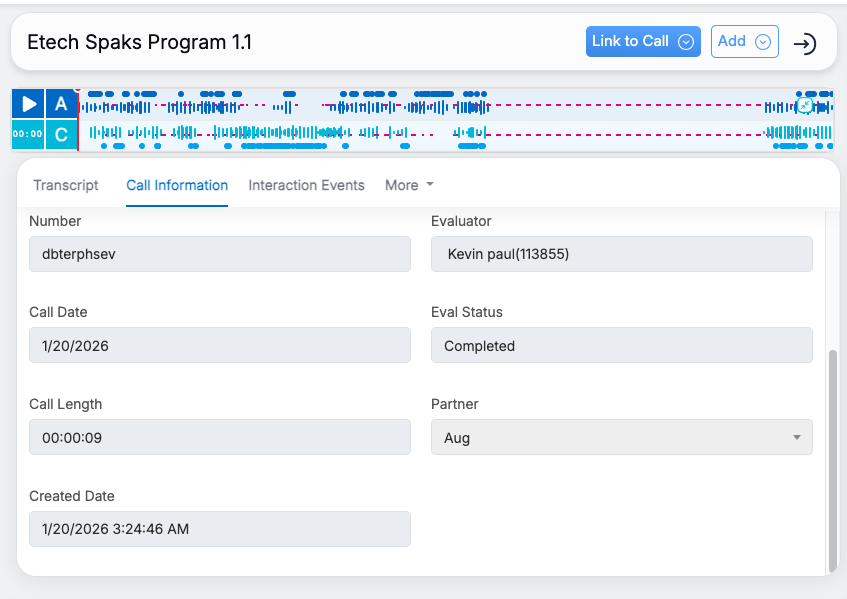
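One way to picture the auto-scroll behavior: given the start timestamp of each transcript message, the player looks up the message that is active at the current playback position and scrolls to it. The Python sketch below does this lookup with a binary search. The function name and the sample timestamps are hypothetical, and the product may implement synchronization differently.

```python
from bisect import bisect_right
from typing import List, Optional


def active_message_index(start_times: List[float], position: float) -> Optional[int]:
    """Return the index of the last message that starts at or before `position`.

    `start_times` must be sorted in ascending order (seconds from call start).
    Returns None if playback has not yet reached the first message.
    """
    index = bisect_right(start_times, position) - 1
    return index if index >= 0 else None


# Hypothetical start times (in seconds) of four transcript messages.
starts = [0.0, 4.2, 9.8, 15.1]

# Seeking to 10 seconds lands inside the third message (index 2).
current = active_message_index(starts, 10.0)
```

Because the lookup depends only on the current position, the same call covers normal playback, seeking, and resuming after a pause.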

- Call Information: The Call Information tab provides a quick overview of key call details, including agent and supervisor names, disposition, location, evaluator name, evaluation status, call length, call type, call date and partner name. It helps users access essential call data and evaluation context at a glance.

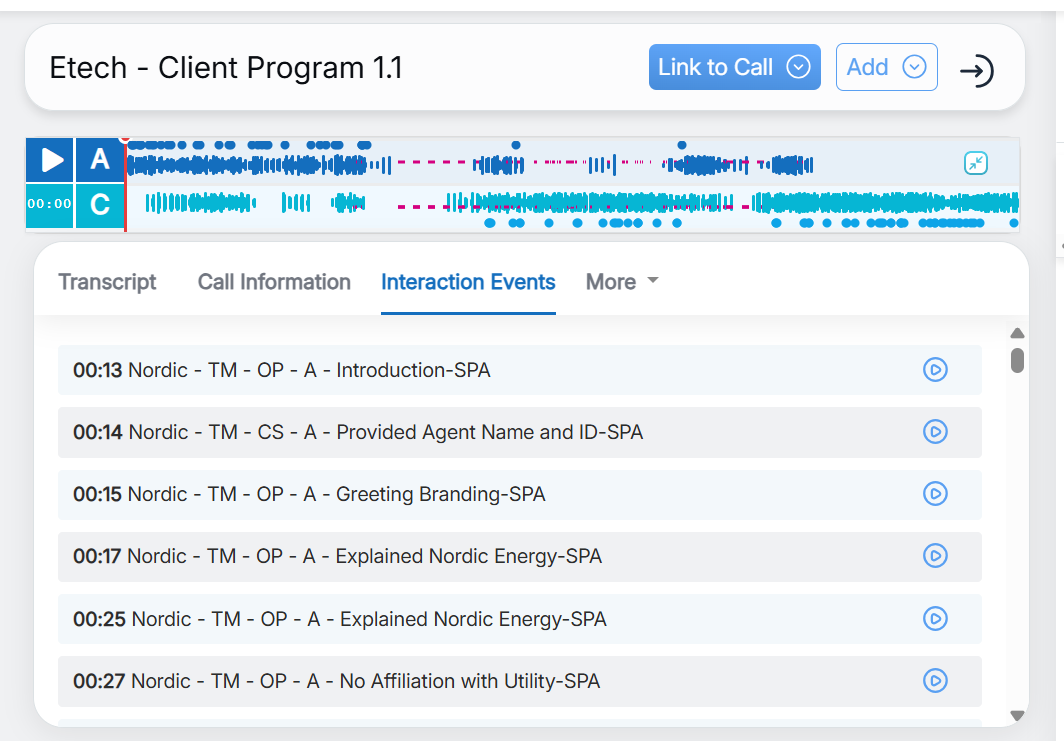

- Interaction Events: The Interaction Events tab displays a chronological list of key events and actions that occurred during the call for detailed evaluation insights.

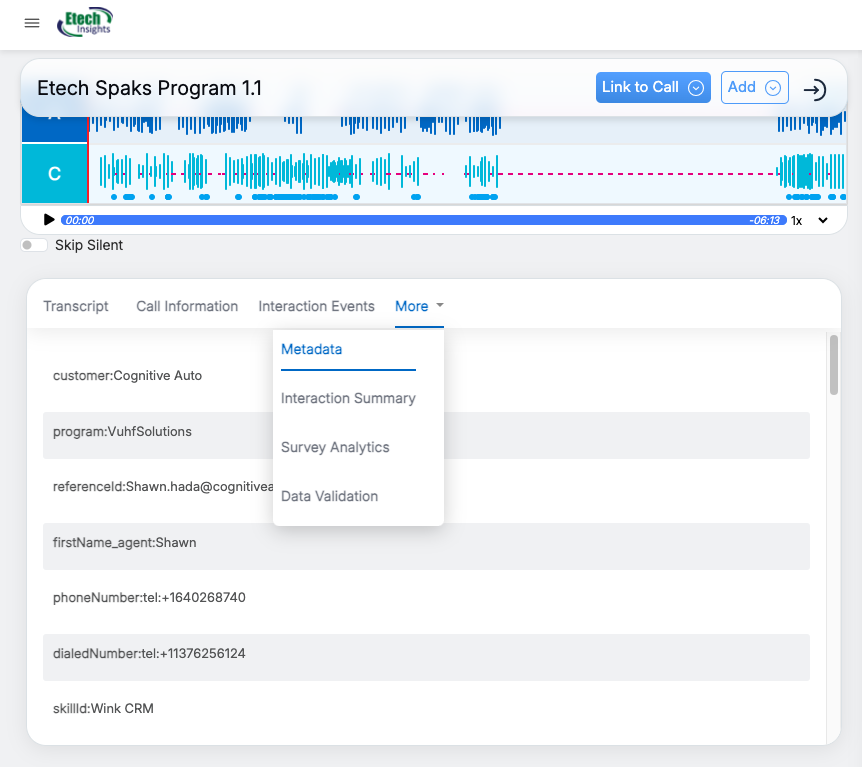

- Metadata: The Metadata tab displays key technical and contextual information about a call, such as the country, state, customer, and program. This information helps evaluators understand the background and structure of the call for accurate analysis.

Note: The Metadata tab shows data only for AI-ingested calls. For manually evaluated calls, this tab does not display any data, because the details are fixed and depend on the information received through the AI-ingested call process.

- Interaction Summary: The Interaction Summary tab provides a detailed overview of the call, featuring evaluation questions alongside AI-generated responses derived from the call content. This helps evaluators quickly assess call quality and agent performance with contextual insights. Users can scroll down to view the summary details.

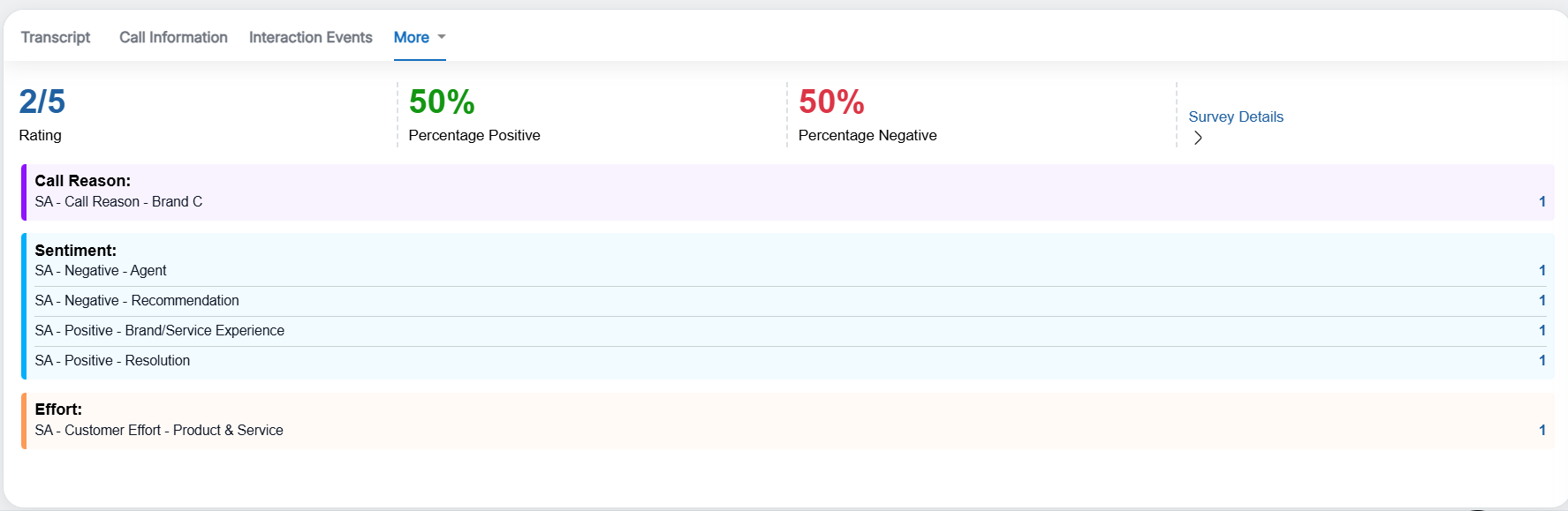

- Survey Analytics: The Survey Analytics tab on the Call Evaluation page provides a comprehensive overview of survey-based insights when surveys are enabled for the selected campaign. It highlights key metrics such as ratings, sentiments, reasons, and effort categories to support deeper analysis.

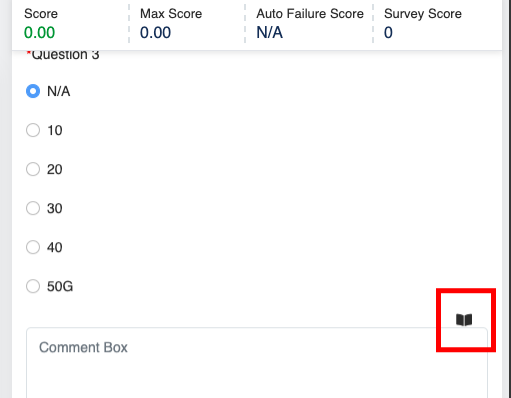

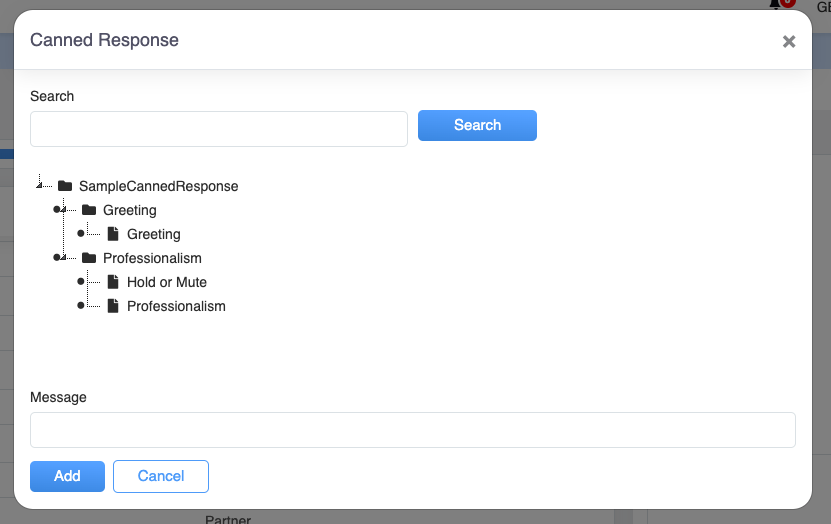

Canned Response

A Canned Response is a ready-made collection of questions or answers. This feature lets users quickly pick questions from the repository instead of typing them out manually. The Admin can add or update questions in the canned responses whenever needed.

This feature is represented by the highlighted icon shown below.

Clicking the Canned Response icon opens the Canned Response window, shown as follows:

The user can select a question from the configured categories. When a question is selected, its preset answer appears in the message box at the bottom of the window, as shown:

For example, when question 1 is selected in the box above, the corresponding answer is displayed in the message box below.

Click the button to add the answer to the Create Evaluation screen.

For more information about this feature, refer to Canned Responses.

Video